Action Flocking – Media Facades 2010 Festival

Action Flocking is all about tracking people’s movement and visualising it in realtime. It lets people interact with a public space simply by moving around in a monitored area: their movements are captured by a video surveillance camera and turned into animated visualisations by OiOi Collective’s Action Flocking software.

Action Flocking was first presented at the Media Facades 2010 Festival, as part of the festival program in three European cities: Helsinki, Berlin and Linz. In Linz it was also part of the Ars Electronica 2010 festival. There was also a realtime connection between Helsinki and Berlin that created an interactive online playing experience.

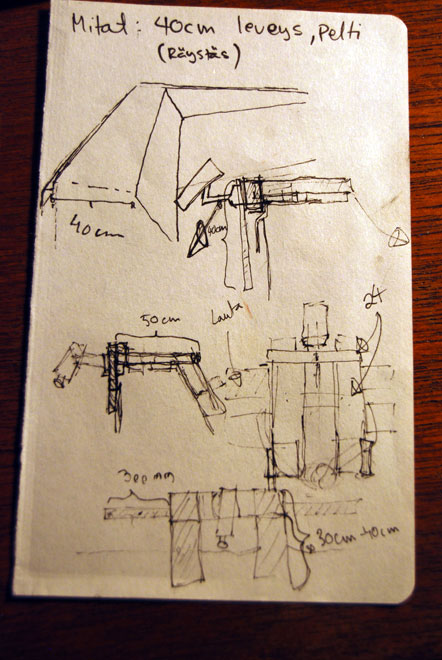

Building Action Flocking

We had a great time building the Action Flocking setup in Helsinki, Berlin and Linz. Here are some pictures we took during those times to show the different locations and hardware setups we dealt with.

Technical mumbo jumbo

Okay, so here’s some technical and nerdy stuff about the Action Flocking (AF) software and a tale about how it all came together. So, sit back, have a beer and try not to get too bored.

Start of the Dance, Enter Flash

At first, the plan was to use Flash to capture, process and draw the image. It was a natural choice, since I’ve got the most experience with Flash and ActionScript. Flash also includes many excellent tools for loading, analysing and displaying images asynchronously. The only thing that worried me was performance. As you may know, Flash is not the fastest horse on the track.

So, once the project had reached the point where we had a ready concept, I got my hands dirty right away and started implementing it in Flash. The first problem was capturing the video stream from the camera. We had bought an IP-based surveillance camera, so there were two options: H.264 and MJPG. Neither of these is supported by the Flash API, so I had to figure something out. After a little searching and testing, I found that the H.264 stream has a bit of delay, probably from the heavy encoding and decoding, which made the MJPG stream the weapon of choice. After all, we needed a good response from the system.

The next step was to find out how to read an MJPG stream into Flash. After googling the subject a bit, I found out that an MJPG stream is actually just a series of regular JPG images put together in a row, with some metadata in between. I also managed to find a really good ready-made AS3 class by Josh Chernoff that uses a simple method: searching for the start and end bytes of each JPG image.
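The start/end-byte trick is easy to sketch. A JPEG image begins with the start-of-image marker bytes `0xFF 0xD8` and ends with the end-of-image marker `0xFF 0xD9`, so a reader can scan incoming bytes for those pairs and cut out everything in between. The C++ below is a minimal sketch of that idea, not Josh Chernoff’s AS3 class; the function names are hypothetical, and it assumes the network code appends every chunk it reads to `buffer`. It deliberately ignores edge cases such as an embedded EXIF thumbnail, whose own end marker would cut a frame short.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using Bytes = std::vector<std::uint8_t>;

// Sentinel for "marker not found".
constexpr std::size_t kNotFound = static_cast<std::size_t>(-1);

// Returns the index of the next 0xFF <second> byte pair at or after `from`.
std::size_t findMarker(const Bytes& buf, std::size_t from, std::uint8_t second) {
    for (std::size_t i = from; i + 1 < buf.size(); ++i)
        if (buf[i] == 0xFF && buf[i + 1] == second) return i;
    return kNotFound;
}

// Cuts every complete JPEG (SOI 0xFFD8 ... EOI 0xFFD9) out of `buffer`.
// Bytes between frames (multipart boundaries, part headers) are discarded;
// an unfinished frame at the end stays in `buffer` for the next read.
std::vector<Bytes> extractJpegFrames(Bytes& buffer) {
    std::vector<Bytes> frames;
    std::size_t pos = 0;  // everything before `pos` has been consumed
    while (true) {
        const std::size_t start = findMarker(buffer, pos, 0xD8);
        if (start == kNotFound) {
            // No frame start left: drop the junk but keep the last byte,
            // which may be the first half of a marker split across reads.
            if (!buffer.empty()) pos = std::max(pos, buffer.size() - 1);
            break;
        }
        const std::size_t end = findMarker(buffer, start + 2, 0xD9);
        if (end == kNotFound) {  // frame not complete yet, wait for more bytes
            pos = start;
            break;
        }
        frames.emplace_back(buffer.begin() + start, buffer.begin() + end + 2);
        pos = end + 2;
    }
    assert(pos <= buffer.size());
    buffer.erase(buffer.begin(), buffer.begin() + pos);
    return frames;
}
```

A caller would append each chunk read from the camera connection to `buffer`, call `extractJpegFrames`, and hand every returned frame to a JPEG decoder.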

With the help of this class, I managed to put together a first tryout of the software. But while it was running, it already consumed quite a lot of processing power without any graphics being drawn. I came to the conclusion that maybe I should try something other than Flash, even though it would probably have been possible to get fairly good performance by harnessing some extra power with Pixel Bender and Alchemy.

Exit Flash, Enter Cinder

Around that time I had bumped into an interesting new framework called Cinder, made by The Barbarian Group. The framework is geared toward creative coding, and the examples made with Cinder by Robert Hodgin are really good proof of that. Go check them out on the Cinder website. (By the way, I saw his talk at Flash on the Beach 2010, and I must say the guy is so goddamn talented.)

So I figured why not try to use Cinder. It would be a nice way to learn about this new framework and also refresh my C++ skills a bit. And so I did.

The first step was to implement the MJPG stream capture in C++. This turned out to be much, much more work than with Flash. I had to manually take care of the socket connections, threads and other stuff that’s handled behind the scenes in Flash. Even when I got the MJPG stream working with a movie texture in Cinder (OpenGL), it was such a mess that I started to look for a simpler solution.

I then realised that I could use Cinder’s inbuilt image load function to load each frame as an individual image, with only one line of code. I tested how fast the image loading was and was actually quite surprised that it ran at over 10 fps. Because this happens in a different thread from the actual drawing, that rate is sufficient for a responsive enough system.
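Decoupling capture from drawing is what makes a loading rate of roughly 10 fps acceptable: the draw loop never waits on the network, it just uses the newest frame available. Here is a minimal sketch of that hand-off in standard C++, assuming a single capture thread and a hypothetical `loadFrame` callback that stands in for the actual Cinder image-loading call (the post doesn’t show its exact form). The class and function names are illustrative.

```cpp
#include <atomic>
#include <cassert>
#include <functional>
#include <mutex>
#include <optional>
#include <utility>

// Single-slot "mailbox": the capture thread overwrites the slot with each
// newly loaded frame, and the draw loop takes whatever is newest. Frames
// that were never drawn are simply dropped, which keeps latency low.
template <typename Frame>
class LatestFrameSlot {
public:
    void publish(Frame frame) {
        std::lock_guard<std::mutex> lock(mutex_);
        latest_ = std::move(frame);
    }

    // Returns the newest frame once, then nothing until a new one arrives.
    std::optional<Frame> take() {
        std::lock_guard<std::mutex> lock(mutex_);
        return std::exchange(latest_, std::nullopt);
    }

private:
    std::mutex mutex_;
    std::optional<Frame> latest_;
};

// Body of the capture thread. `loadFrame` is a hypothetical placeholder for
// whatever fetches and decodes one JPEG from the camera. Intended launch:
//   std::thread capture(captureLoop<Frame>, std::ref(slot), loader, std::ref(running));
// Set `running` to false and join the thread to stop capturing.
template <typename Frame>
void captureLoop(LatestFrameSlot<Frame>& slot,
                 const std::function<Frame()>& loadFrame,
                 std::atomic<bool>& running) {
    assert(static_cast<bool>(loadFrame));  // a loader must be supplied
    while (running.load()) {
        slot.publish(loadFrame());
    }
}
```

Keeping a single slot instead of a queue is a deliberate choice in this sketch: a queue would let latency build up whenever drawing falls behind, while a single slot always shows the most recent state of the monitored area.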

The Algorithm

So now I had the video stream on a movie texture in Cinder. Next I had to figure out how to actually detect the moving people in the picture. I pondered the subject for a while and started experimenting. Two possibilities came to mind at that point:

- Detect movement by subtracting the current frame from the previous frame

- Detect differences by subtracting an image of the empty background from the current frame

The first option does not need any preparation or special setup, but it only detects movement that happens between two consecutive frames. It will not detect, for example, a person who has moved into the picture but then stands still.

The second option also detects this kind of difference, but it needs a reference image of the empty background to compare against. This means that the area has to be emptied and the reference image taken before the algorithm can work properly. Also, if the lighting changes enough after the reference image has been taken, the algorithm will no longer work properly.
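A toy example makes the difference between the two options concrete. The sketch below counts pixels that differ by more than a threshold between two 8-bit grayscale images; the only thing that separates the two strategies is which image is passed as the reference, the previous frame or the empty background. The image layout, the function name and the threshold are assumptions for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <vector>

using GrayImage = std::vector<std::uint8_t>;  // row-major 8-bit grayscale pixels

// Counts pixels whose brightness differs by more than `threshold` between
// two images of the same size. Pass the previous frame as `reference` for
// frame differencing, or the empty background for background subtraction.
std::size_t countChangedPixels(const GrayImage& current, const GrayImage& reference,
                               int threshold) {
    assert(current.size() == reference.size());
    std::size_t changed = 0;
    for (std::size_t i = 0; i < current.size(); ++i)
        if (std::abs(int(current[i]) - int(reference[i])) > threshold) ++changed;
    return changed;
}
```

For a person who walked in and then stopped, the current and previous frames are nearly identical, so `countChangedPixels(current, previous, t)` drops to about zero, while `countChangedPixels(current, background, t)` still reports the pixels the person covers.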

I came to the conclusion that option number two is better for this project, since we also want to detect people in the area who are standing still.

Next, I implemented this background subtraction by thresholding the current frame and the reference frame and then subtracting them, ending up with a blank image in which only the changed pixels are lit. Then I had to implement something called blob detection, which is also something I found out about on the Internet. Blob detection basically identifies the individual blobs in an image and, for example, their center points.
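As a sketch, the subtraction step can produce a binary mask for the blob detection to work on. The paragraph’s wording could also mean binarising each frame before subtracting; this version instead thresholds the absolute per-pixel difference, a common way to do background subtraction, so both brighter and darker changes count. It assumes row-major 8-bit grayscale frames, and the function name and threshold parameter are illustrative, not taken from the AF code.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <vector>

// Binary mask from background subtraction: 1 where the current frame differs
// from the empty-background reference by more than `threshold`, else 0.
// Both inputs are row-major 8-bit grayscale images of width * height pixels.
std::vector<std::uint8_t> foregroundMask(const std::vector<std::uint8_t>& current,
                                         const std::vector<std::uint8_t>& background,
                                         int width, int height, int threshold) {
    const std::size_t n = static_cast<std::size_t>(width) * height;
    assert(current.size() == n && background.size() == n);
    std::vector<std::uint8_t> mask(n, 0);
    for (std::size_t i = 0; i < n; ++i) {
        const int diff = std::abs(int(current[i]) - int(background[i]));
        mask[i] = diff > threshold ? 1 : 0;
    }
    return mask;
}
```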

Now, there are numerous ways of doing blob detection, but I stumbled upon a great algorithm called the growing regions algorithm, explained and implemented by Erik van Kempen. He explains really well how the algorithm works, and he also provides C++ source code, which was excellent for me because I could use it with only small modifications instead of porting it from another language.
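Region growing itself is short. The sketch below is not Erik van Kempen’s code but a minimal independent version of the same idea: every unvisited foreground pixel seeds a new blob, which is grown breadth-first through its 4-connected foreground neighbours, and the blob’s center point is the mean of its pixel coordinates. The `Blob` struct and the choice of 4-connectivity are assumptions.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <queue>
#include <utility>
#include <vector>

struct Blob {
    int area;        // number of pixels in the region
    double centerX;  // centroid: mean x of the region's pixels
    double centerY;  // centroid: mean y of the region's pixels
};

// Region growing over a binary mask (nonzero = foreground, row-major).
std::vector<Blob> findBlobs(const std::vector<std::uint8_t>& mask, int width, int height) {
    assert(mask.size() == static_cast<std::size_t>(width) * height);
    std::vector<bool> visited(mask.size(), false);
    std::vector<Blob> blobs;
    const int dx[4] = {1, -1, 0, 0};
    const int dy[4] = {0, 0, 1, -1};
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const std::size_t seed = static_cast<std::size_t>(y) * width + x;
            if (!mask[seed] || visited[seed]) continue;
            // Grow a new region from this seed pixel.
            long long sumX = 0, sumY = 0;
            int area = 0;
            std::queue<std::pair<int, int>> frontier;
            frontier.push({x, y});
            visited[seed] = true;
            while (!frontier.empty()) {
                const int px = frontier.front().first;
                const int py = frontier.front().second;
                frontier.pop();
                ++area;
                sumX += px;
                sumY += py;
                for (int k = 0; k < 4; ++k) {
                    const int nx = px + dx[k], ny = py + dy[k];
                    if (nx < 0 || ny < 0 || nx >= width || ny >= height) continue;
                    const std::size_t n = static_cast<std::size_t>(ny) * width + nx;
                    if (mask[n] && !visited[n]) {
                        visited[n] = true;
                        frontier.push({nx, ny});
                    }
                }
            }
            blobs.push_back({area, double(sumX) / area, double(sumY) / area});
        }
    }
    return blobs;
}
```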

Basically, after the blob detection and dismissing blobs that were too small, I had a nice clean array of blob center points updating at a regular rate. This was the point where the nasty groundwork had been done and the actual creative work could begin.
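Dismissing small blobs amounts to an area filter. In this sketch the `Blob` and `Point` structs and the `minArea` parameter are hypothetical; its value would presumably be tuned to the camera’s resolution and viewing distance, so that noise and compression artefacts are dropped while a person still counts.

```cpp
#include <cassert>
#include <vector>

struct Blob { int area; double centerX; double centerY; };
struct Point { double x; double y; };

// Keeps only blobs with at least `minArea` pixels and returns their center
// points; tiny blobs are treated as noise rather than people.
std::vector<Point> blobCenters(const std::vector<Blob>& blobs, int minArea) {
    assert(minArea >= 0);
    std::vector<Point> centers;
    for (const Blob& b : blobs)
        if (b.area >= minArea) centers.push_back({b.centerX, b.centerY});
    return centers;
}
```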

So, I started to fool around with OpenGL, which I had only a little experience with before. It was great fun to learn it, test stuff, come up with different visualisations and finally make them run really smoothly at 25 fps. We kept improving the graphics almost every night we were on the road in Europe.

As part of our performance, we also managed to build a working connection between Helsinki and Berlin on one particular night. It allowed both cities to paint a shared canvas collaboratively. Basically, all it required was a TCP connection between the computers running the AF software, sending blob coordinate data back and forth.
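Sending blob coordinates over TCP needs some framing, because TCP delivers a continuous byte stream rather than discrete messages, so a single read may contain half a message or several. The post doesn’t describe the format AF used; the sketch below assumes a hypothetical one: a big-endian `uint32` count followed by one pair of 32-bit floats per blob center, with a decoder that waits until a whole message has arrived. It assumes IEEE-754 floats on both machines, and socket setup is omitted.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <optional>
#include <vector>

static_assert(sizeof(float) == 4, "wire format assumes 32-bit floats");

struct Point { float x; float y; };

// Hypothetical wire format: uint32 count, then count x (float32 x, float32 y),
// every 32-bit value written big-endian.
namespace {
void putU32(std::vector<std::uint8_t>& out, std::uint32_t v) {
    for (int shift = 24; shift >= 0; shift -= 8)
        out.push_back(static_cast<std::uint8_t>(v >> shift));
}
std::uint32_t getU32(const std::uint8_t* p) {
    return (std::uint32_t(p[0]) << 24) | (std::uint32_t(p[1]) << 16) |
           (std::uint32_t(p[2]) << 8) | std::uint32_t(p[3]);
}
std::uint32_t floatBits(float f) { std::uint32_t u; std::memcpy(&u, &f, sizeof u); return u; }
float bitsFloat(std::uint32_t u) { float f; std::memcpy(&f, &u, sizeof f); return f; }
}  // namespace

std::vector<std::uint8_t> encodeBlobMessage(const std::vector<Point>& points) {
    assert(points.size() <= 0xFFFFFFFFu);
    std::vector<std::uint8_t> out;
    putU32(out, static_cast<std::uint32_t>(points.size()));
    for (const Point& p : points) {
        putU32(out, floatBits(p.x));
        putU32(out, floatBits(p.y));
    }
    return out;
}

// Returns nullopt until `buffer` holds one complete message; on success the
// message bytes are removed from the front of `buffer`.
std::optional<std::vector<Point>> decodeBlobMessage(std::vector<std::uint8_t>& buffer) {
    if (buffer.size() < 4) return std::nullopt;
    const std::uint32_t count = getU32(buffer.data());
    const std::size_t needed = 4 + std::size_t(count) * 8;
    if (buffer.size() < needed) return std::nullopt;
    std::vector<Point> points;
    points.reserve(count);
    for (std::uint32_t i = 0; i < count; ++i) {
        const std::uint8_t* p = buffer.data() + 4 + std::size_t(i) * 8;
        points.push_back({bitsFloat(getU32(p)), bitsFloat(getU32(p + 4))});
    }
    buffer.erase(buffer.begin(), buffer.begin() + needed);
    return points;
}
```

The receiving side would append every chunk read from the socket to its buffer and call `decodeBlobMessage` in a loop until it returns nothing, drawing the other city’s blob centers alongside the local ones.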

Building the software and overcoming the obstacles was totally fun, and it got us to a point from which it will be good to continue the project and take it to the next level in some other project.